Finally a proper vertical tabs option!

Personal preference ofc but after trying it on a whim I can’t go back.

Tree Style Tabs, Sidebery, or similar are must-haves. I do try to clean up my tabs regularly, but I almost always have more open than can conveniently be displayed in a horizontal tab bar.

Vertical screen real estate is at a higher premium on desktop in general, anyway. There's no point in keeping my browser at the full width of my screen when most sites adamantly refuse to use the space (case in point: Lemmy).

Yeah, I hopped back over from Edge when the Manifest V3 stuff came out, and the two main things I miss are proper profile management and vertical tabs - I'm currently using https://codeberg.org/ranmaru22/firefox-vertical-tabs to get around it, but having native solutions to both issues will be a massive (and recently rare) Firefox W.

We don’t need AI in a browser.

Companies need to stop shoving AI into everything

If it’s using a local model like it says I think this is fine:

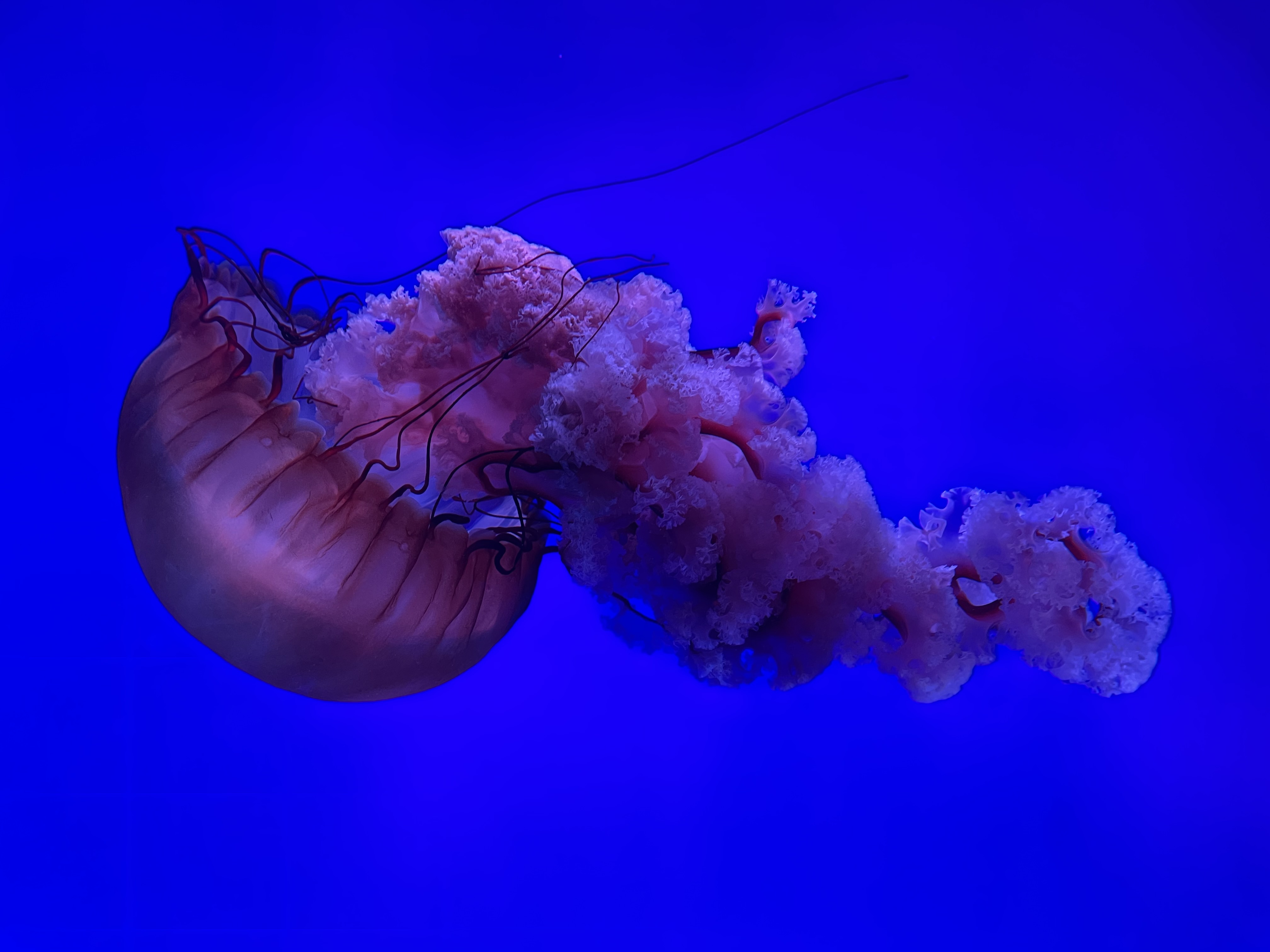

We’re looking at how we can use local, on-device AI models – i.e., more private – to enhance your browsing experience further. One feature we’re starting with next quarter is AI-generated alt-text for images inserted into PDFs, which makes it more accessible to visually impaired users and people with learning disabilities.

Did you read more than just the three words naming the feature? Their use case is actually smart, and could potentially help users a lot.

The recent addition of local in-browser website translation is an awesome feature I’ve missed for many years. The only alternatives I’ve found previously were either paid or Google Translate plugins. This translation feature is an example of an AI feature they’ve added.

Native Tab Grouping

I’m old enough to remember previous native tab grouping.

I can’t see how AI can’t be done in a privacy-respecting way [edit: note the double negative there]. The problem that worries me is performance. I have used text-to-speech AI and it absolutely destroys my poor processors. I really hope there’s an efficient way of adding alt text, or of turning the feature off for users who don’t need it.

If it runs locally then no data ever leaves your system, so privacy would be respected. There are tons of good local-only LLMs out there right now.

As far as performance goes, current x86 CPUs are awful, but stuff coming out from ARM and likely from Intel/AMD in the future will be much better at running this stuff.

Not AI! Please!

I swear, tomorrow I'll find AI in the goddamn toilet paper!

But but, AI bad!

Is there a community where we can post whatever the popular opinion happens to be at the time? A sister community for !unpopularopinion@lemmy.world seems to be increasingly necessary.

I hate to break it to you, but right now, AI is being pushed by Tesla, Microsoft, Apple, Google. Pretty much every major megacorporation.

The environmental impacts are staggeringly horrible.

But sure, AI good.

That’s pretty damn awesome

MS Word has this feature and it’s absolutely terrible. 508-compliant alt text, which we're required to include in documents we publish at work, requires a couple of sentences of explanation. Word uses like 3 words to describe an image.

It’s just another feature that needs to be turned off on first start.

Why?

I prefer my browser just displaying websites and not doing random AI stuff or telemetry.

Visually impaired people prefer being able to use the browser and actually be able to understand the content of websites.

These features are local, private and improve accessibility, so I really don’t see any similarities with telemetry which can be turned off anyway.

A well-implemented language model could be a huge QOL improvement. The fact that 90% of AI implementations are half-assed ChatGPT frontends does not reflect the utility of the models themselves; it only reflects the lack of vision and haste to ship of most companies.

Arc Browser has some interesting AI features, but since they’re shipping everything to OpenAI for processing, it’s a non-starter for me. It also means the developers’ interests are not aligned with my own, since they are paying by the token for API access. Mozilla is going to run local LLMs, so everything will remain private, and limited only by my own hardware and my own configuration choices. I’m down with that.

I’d love to see Firefox auto-fetch results from web searches and summarize them for me, bypassing clickbait, filler, etc. You’ve probably seen AI summary bots here on Lemmy, and I find them very helpful at cutting the crap and giving me exactly what I want, which is information in text form. That’s something that’s harder and harder to get from web sites nowadays. Never see a recipe writer’s life story again!

Don’t be ridiculous... Now an AI-powered bidet that really gets the shit off your butthole...

I’m happy about 2/3 of these things

there are 4 things

I’m happy about 3/4 of these things

Why do you hate vertical tabs so much, mate?

They shouldn’t have announced until it was ready for release.

I just bought my first ultrawide monitor and was just getting into testing the available options for vertical tabs, but I don’t like having them duplicated, so I was just thinking that this should be a native feature.

And now I can’t wait.

Will this fix tabs not being draggable into new or other windows in some Wayland setups?

Yes, I mean that’s one of the points explicitly listed, no?

The only mention of Wayland I can find on the linked page is a comment mentioning issues with Wayland in Chromium.

It’s local. You’re not sending data to their servers.

We’re looking at how we can use local, on-device AI models – i.e., more private – to enhance your browsing experience further. One feature we’re starting with next quarter is AI-generated alt-text for images inserted into PDFs, which makes it more accessible to visually impaired users and people with learning disabilities. The alt text is then processed on your device and saved locally instead of cloud services, ensuring that enhancements like these are done with your privacy in mind.

At least use the whole quote.

yeah, of course it's gonna look like it's not local if you take out the part where it says it's local

That’s somewhat awkward phrasing but I think the visual processing will also be done on-device. There are a few small multimodal models out there. Mozilla’s llamafile project includes multimodal support, so you can query a language model about the contents of an image.

Even just a few months ago I would have thought this was not viable, but the newer models are game-changingly good at very small sizes. Small enough to run on any decent laptop or even a phone.

Ugh. I hate tabs on mobile. Had to trash my homepage settings in Chrome to stop it loading a new tab every time I opened the app. Firefox is bloated enough w/o the tab bullshit.

Don’t use it then

There are no tabs on mobile anyways. Only an openable list of currently open websites.